Using AI to prevent cheating

AI Integration

Making Coursera assessments harder to cheat on so students can earn credit in emerging fields

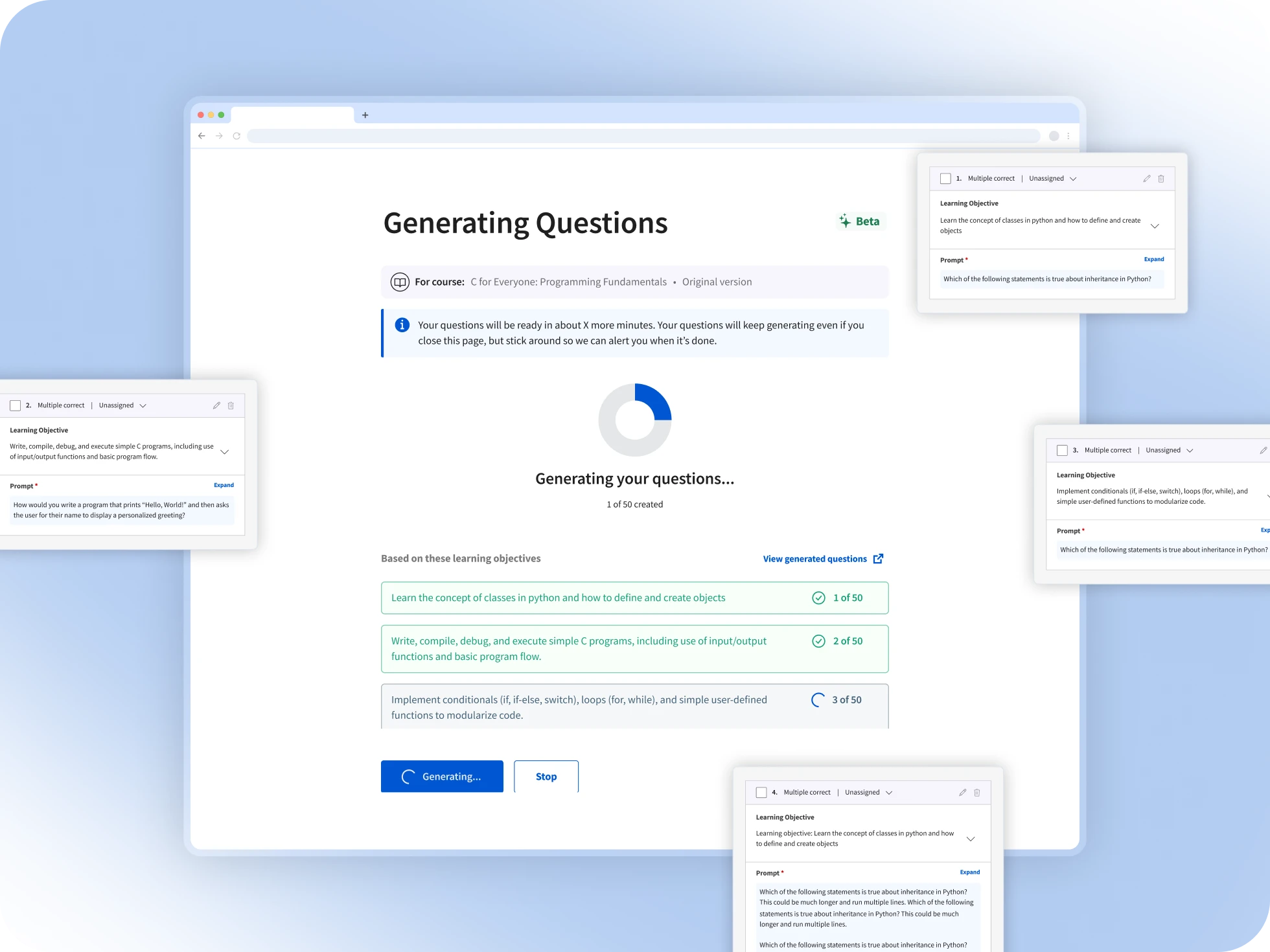

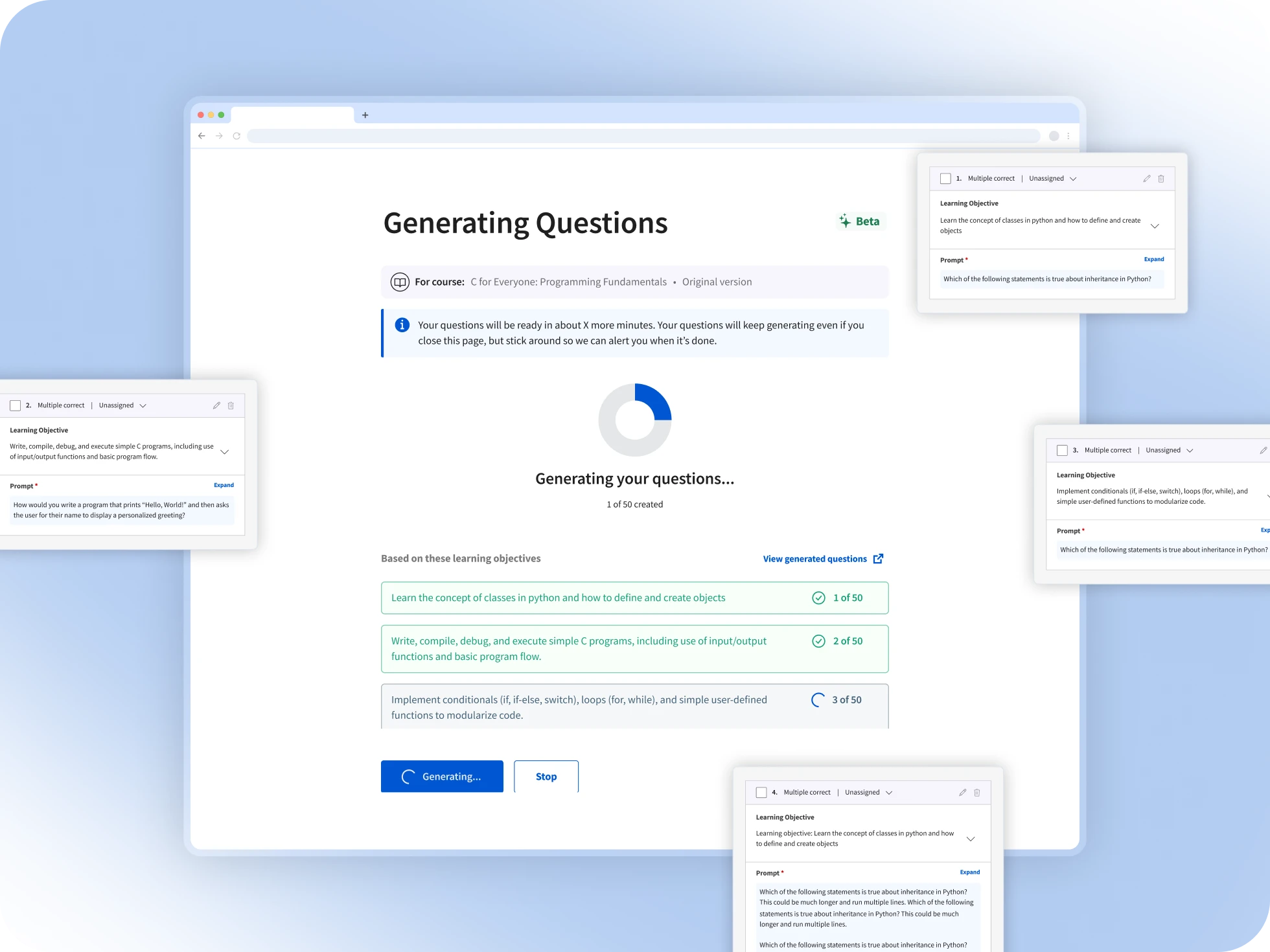

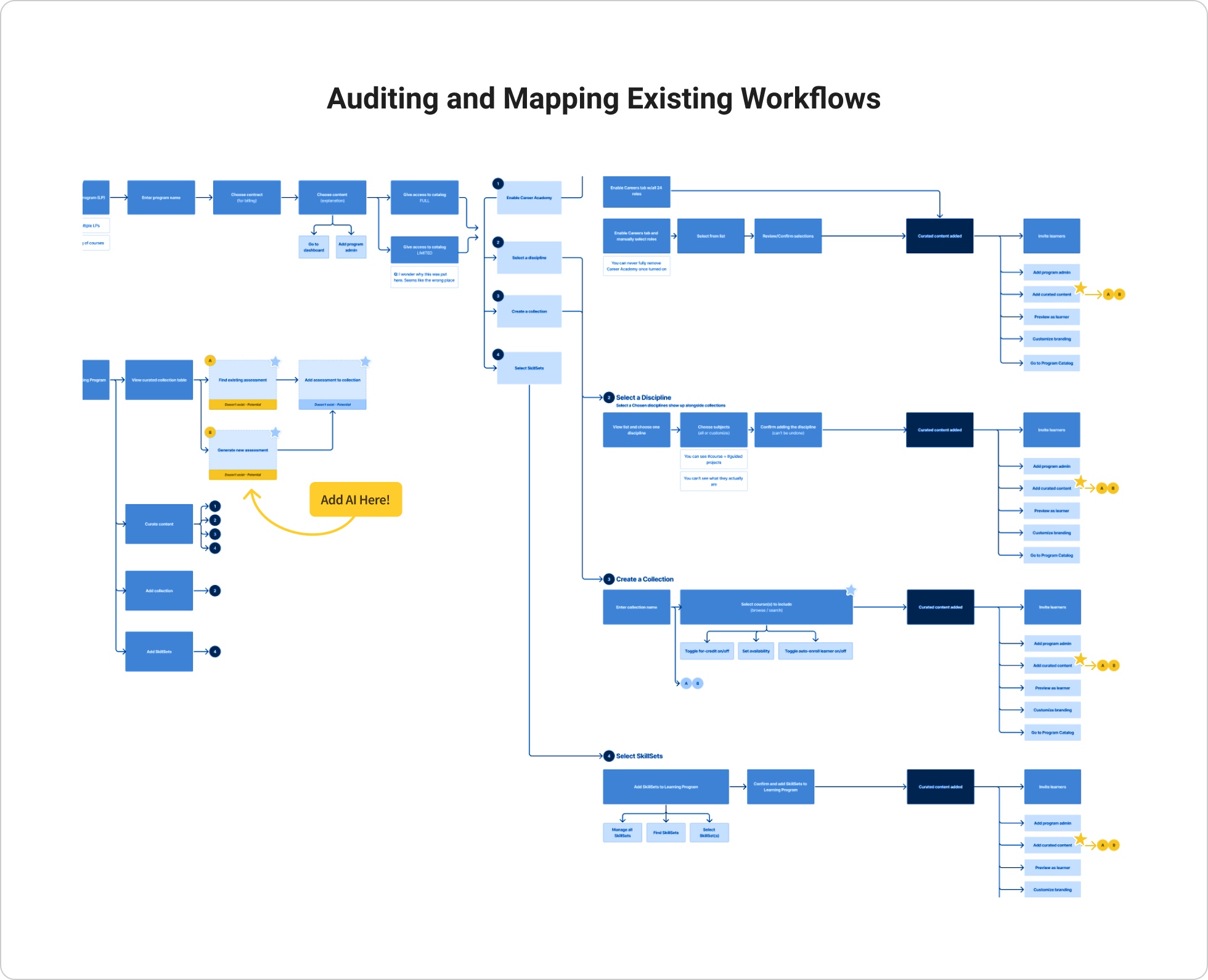

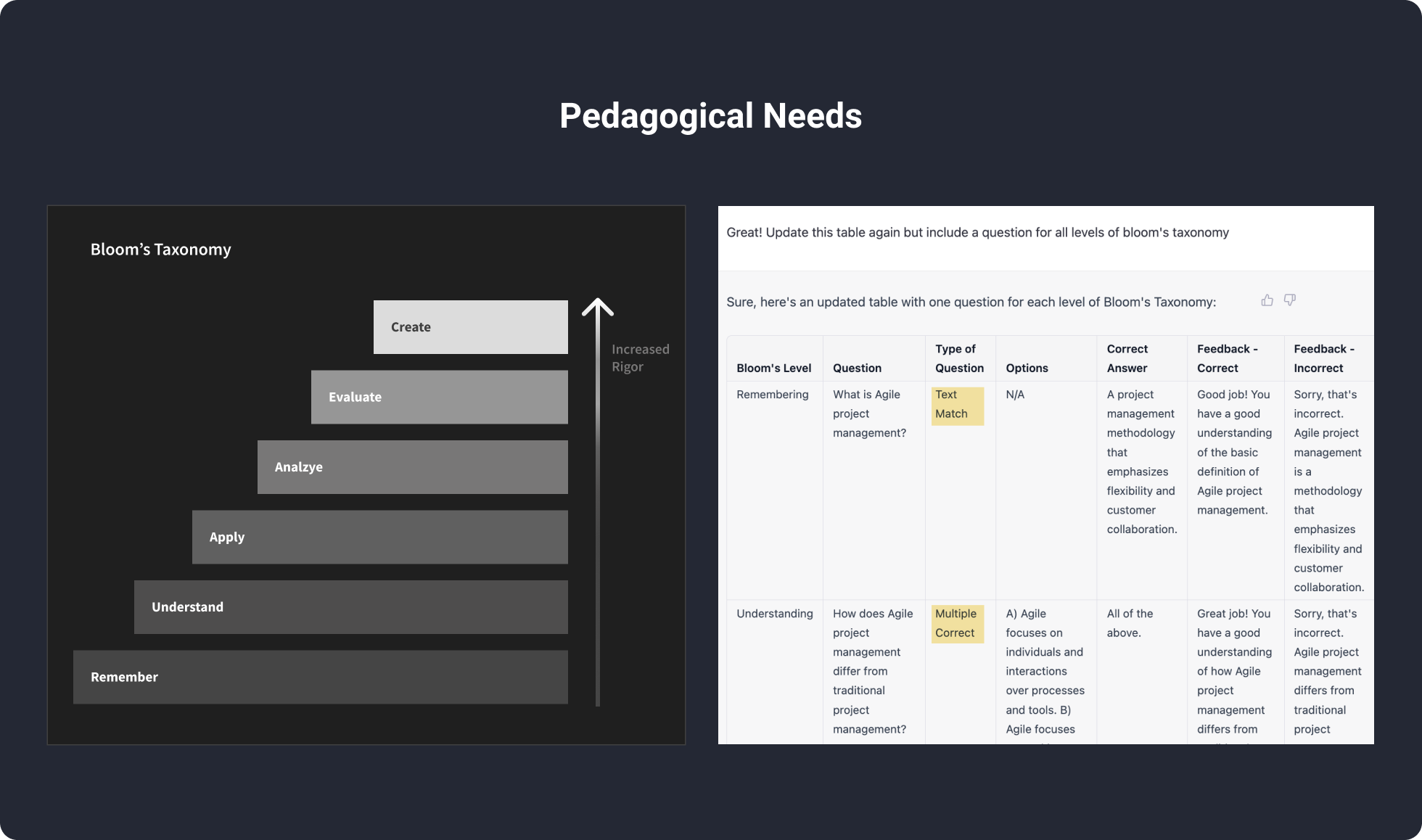

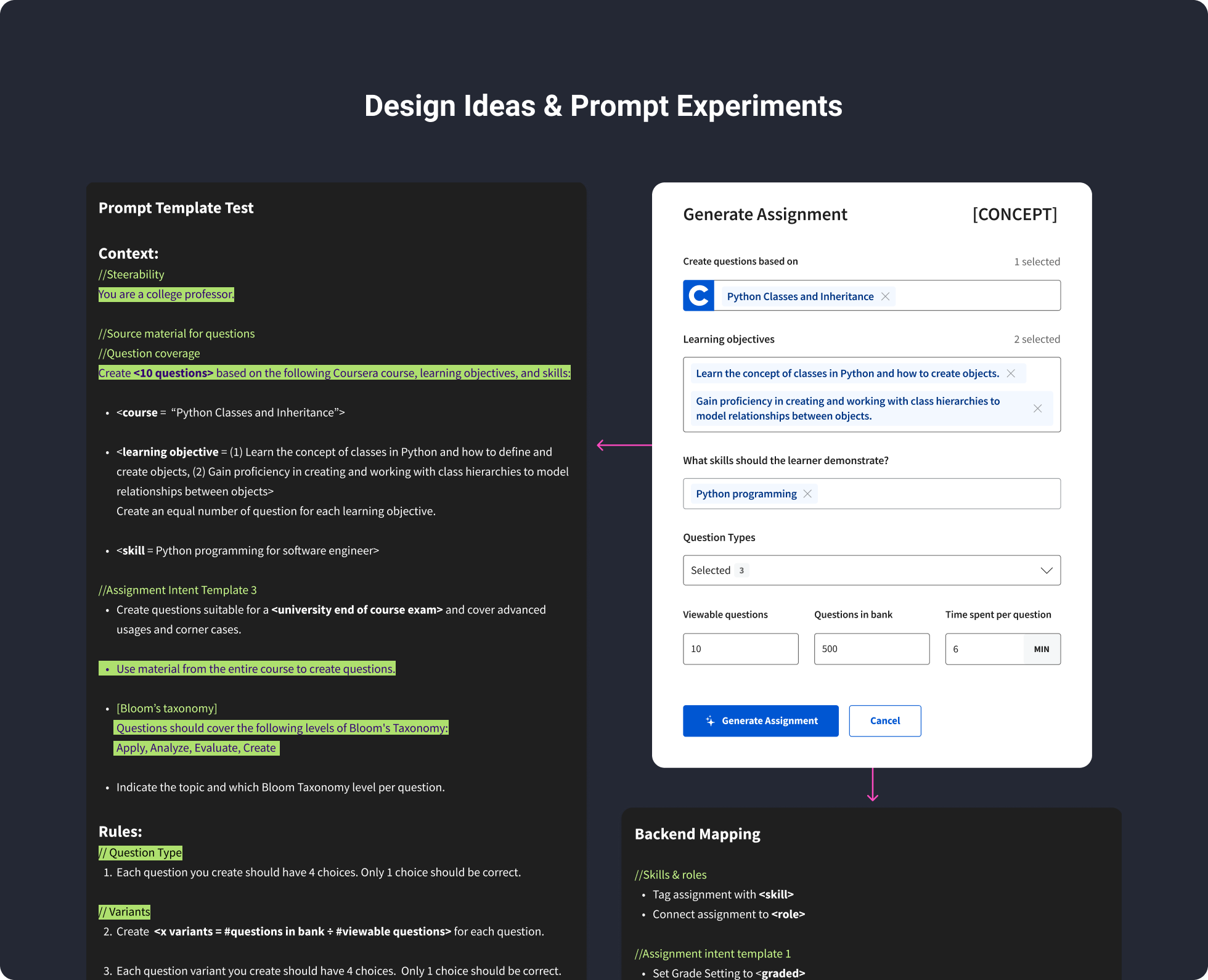

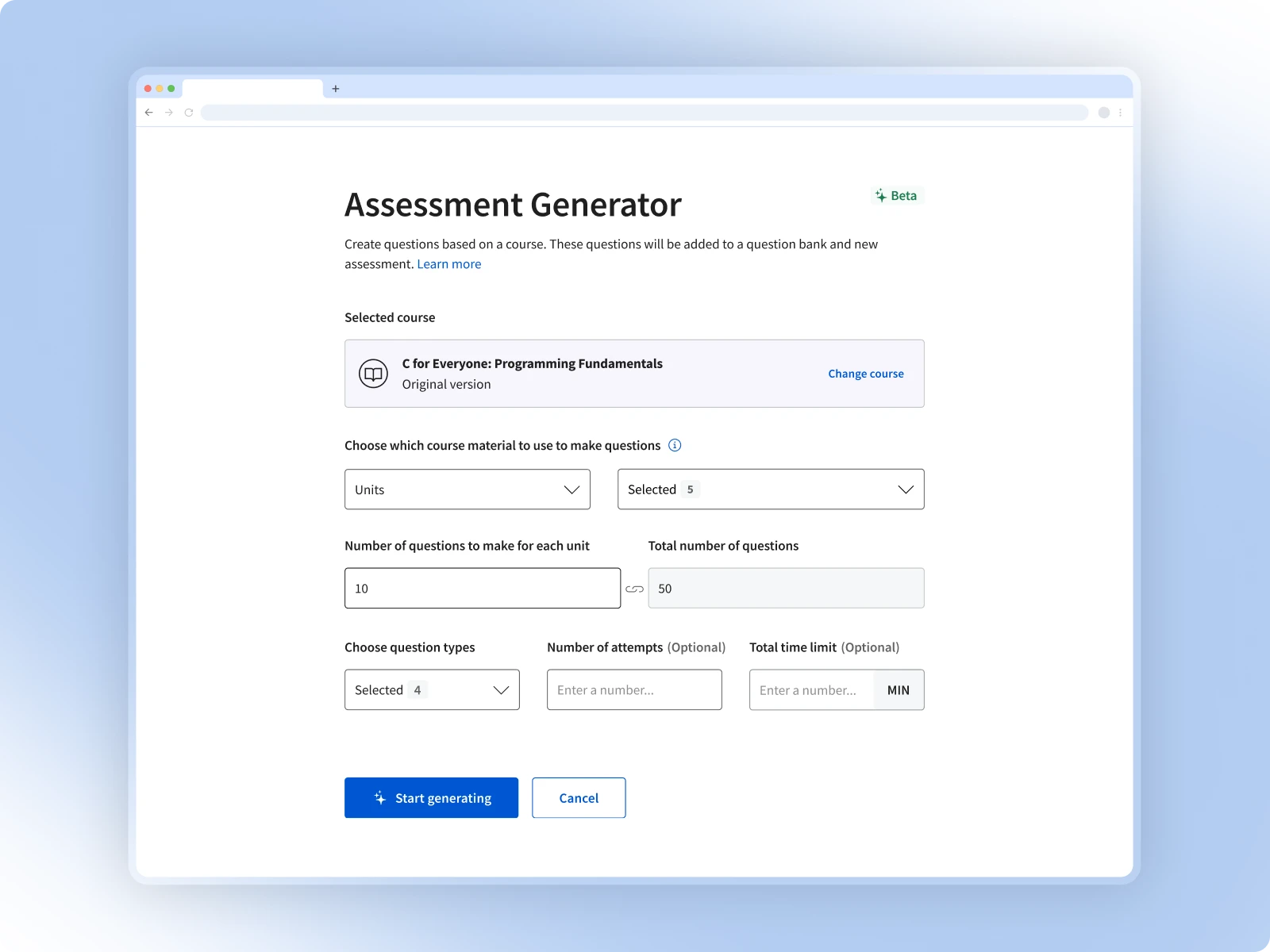

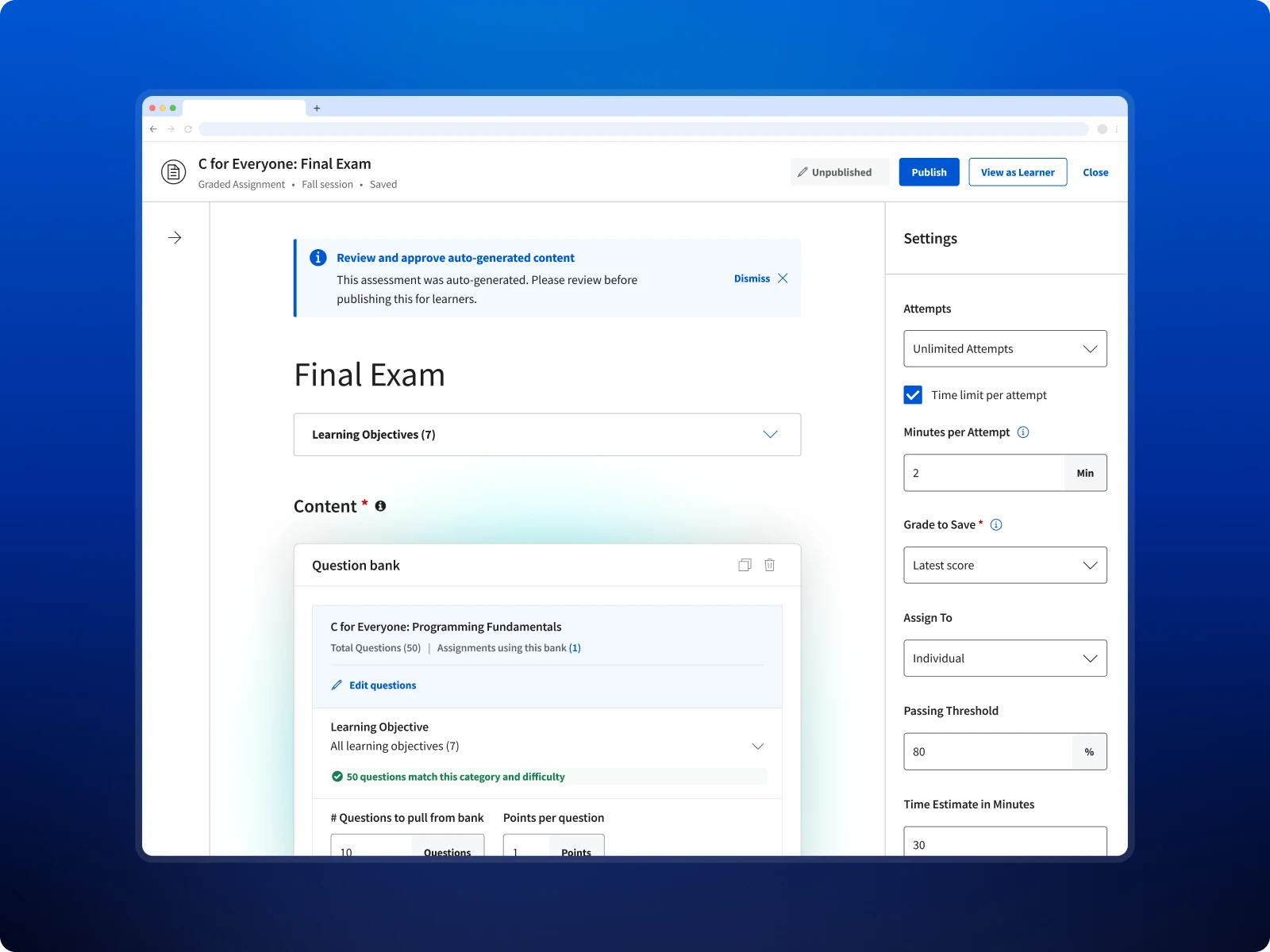

I led design for Coursera’s first AI-powered assessment tool, which gives educators a fast way to create rigorous, credit-worthy exams. Within four months, 48 institutions had adopted the tool, expanding access to university credit in fields where students previously had no path to it.